Emerging Research Topics in Engineering (ERTE) – 2021

About ERTE 2021

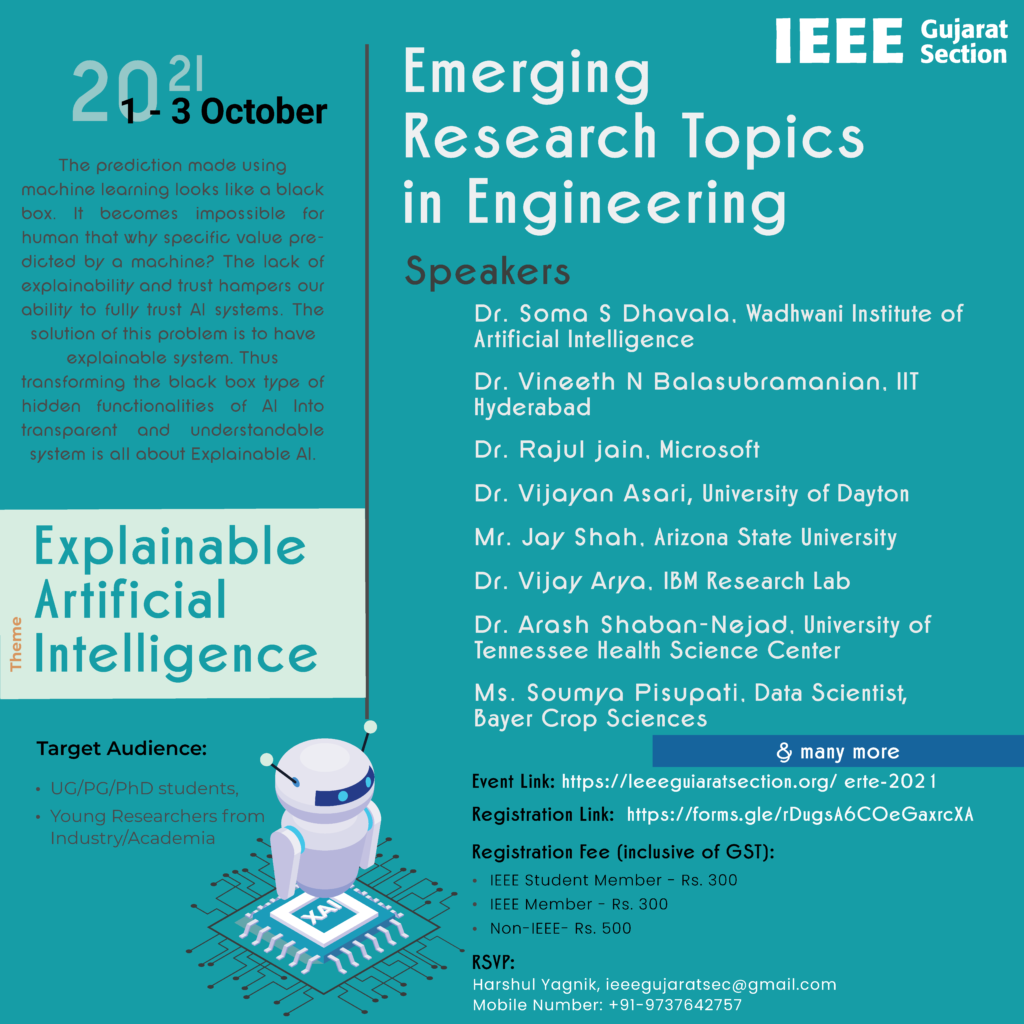

“Emerging Research Topics in Engineering (ERTE)” is the flagship event of IEEE Gujarat Section. This year's event is scheduled for October 1-3, 2021 in virtual mode, with the theme “Explainable AI (XAI)”. The event aims to encourage young researchers, PhD scholars, Master's students, early-career professionals and faculty members to learn the state of the art in emerging and challenging research areas from eminent speakers around the globe. This may help participants discover their career paths and identify their own research topics and problem statements.

About IEEE Gujarat Section

IEEE Gujarat Section (https://ieeegujaratsection.org/) (registered u/s 80G) comes under the Asia-Pacific Region, Region 10 of IEEE. The Section currently has 29 student branches, 10 society chapters, 2 council chapters and 3 affinity groups. The Section regularly conducts quality technical events, expert talks, meetings and volunteer development programs.

Theme: Explainable AI (XAI)

Mode: Online (Virtual Event)

Dates: October 1-3, 2021

Target audience: IEEE members / Non-IEEE members / Industry Professionals / Academia / Young Researchers

- General Chair
  - Maniklal Das
- Program Chair
  - Kiran Amin
  - Chirag Paunwala
- Organizing Chair
  - Ashish Phophalia
  - Harshul Yagnik
- Organizing Committee
  - Ankit Dave
  - Satvik Khara
  - Foram Rajdev
  - Sumit Makwana

Dr. Soma Dhavala


Principal Researcher at Wadhwani AI, Bangalore, India

LinkedIn: https://www.linkedin.com/in/somasdhavala/

Google Scholar: https://scholar.google.com/citations?user=Rkh1zb8AAAAJ&hl=en


Bio: Dr. Soma Dhavala is Principal Researcher at Wadhwani AI, Bangalore, India. He is the founder of mlsquare.org and a co-founder of Vital Ticks Pvt. Ltd. He has extensive research experience at companies such as ilimi.in, Dow AgroSciences and GE Global Research, and as a volunteer teacher at Purnapramati, Bangalore. He received his PhD in Statistics from Texas A&M University (2005 to 2010), an M.S. in Electrical Engineering from the Indian Institute of Technology, Madras (1997 to 2000), and a B.S. in Electronics and Communication from Andhra University.

Title of Talk: All explanations are right — only some are useful

Dr. Vijayan K. Asari


Professor, University of Dayton, Ohio, USA

Home Page: https://udayton.edu/directory/engineering/electrical_and_computer/asari_vijayan.php

Google Scholar: https://scholar.google.com/citations?user=JLhA4-8AAAAJ&hl=en

LinkedIn: https://www.linkedin.com/in/vijayan-asari-6371b822


Bio: Dr. Vijayan K. Asari is a Professor in Electrical and Computer Engineering and Ohio Research Scholars Endowed Chair in Wide Area Surveillance at the University of Dayton, Dayton, Ohio, USA. He is the director of the Center of Excellence for Computational Intelligence and Machine Vision (Vision Lab) at UD. As leaders in innovation and algorithm development, UD Vision Lab specializes in object detection, recognition and tracking in wide area surveillance imagery captured by visible, infrared, thermal, LiDAR (Light Detection and Ranging), and SAR (Synthetic Aperture Radar) sensors. Dr. Asari’s research activities also include development of novel algorithms for 3D scene creation and visualization from 2D video streams, automatic visibility improvement of images captured in various weather conditions, human identification, human action and activity recognition, and brain signal analysis for emotion recognition and brain machine interface.

Dr. Asari received his BS in electronics and communication engineering from the University of Kerala, India in 1978, M Tech and PhD degrees in Electrical Engineering from the Indian Institute of Technology, Madras in 1984 and 1994 respectively. Prior to joining UD in February 2010, Dr. Asari worked as Professor in Electrical and Computer Engineering at Old Dominion University, Norfolk, Virginia for 10 years. Dr. Asari worked at National University of Singapore during 1996-98 and led a research team for the development of a vision-guided micro-robotic endoscopy system. He also worked at Nanyang Technological University, Singapore during 1998-2000 and led the computer vision and image processing related research activities in the Center for High Performance Embedded Systems at NTU.

Dr. Asari holds four patents and has published more than 700 research papers, including an edited book on wide area surveillance and 118 peer-reviewed journal papers in the areas of computer vision, image processing, pattern recognition, and machine learning. Dr. Asari has supervised 30 PhD dissertations and 45 MS theses during the last 20 years. Currently several graduate students are working with him in different sponsored research projects. Dr. Asari is participating in several federal and private funded research projects and he has so far managed around $25M research funding. Dr. Asari received several awards for teaching, research, advising and technical leadership. He is an elected Fellow of SPIE and a Senior Member of IEEE, and a co-organizer of several SPIE and IEEE conferences and workshops.

Title of Talk: Emotion Recognition by Spatiotemporal Analysis of EEG Signals

Emotion recognition by analyzing electroencephalographic (EEG) recordings is a growing area of research. EEG can detect neurological activity and collect data representing brain signals without the need for any invasive technology or procedures. EEG recordings are useful for detecting emotions by monitoring the spatiotemporal variations of activations inside the brain. Specific spectral descriptors are extracted from the EEG data as features to quantify these spatiotemporal variations and distinguish different emotions. Several features representing different brain activities are estimated for the classification of emotions. An experimental setup has been established to elicit distinct emotions (joy, sadness, disgust, fear, surprise, neutral, etc.). EEG signals are collected using a 256-channel system, preprocessed using band-pass filters and a Laplacian montage, and decomposed into five frequency bands using the Discrete Wavelet Transform. The decomposed signals are transformed into different spectral descriptors and classified using a multilayer perceptron neural network. The logarithmic power descriptor produced the highest recognition rates, around 92 to 95 percent in different experiments.
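The decomposition and descriptor steps in the abstract above can be illustrated with a minimal sketch. It is not the speaker's implementation: the abstract does not name the wavelet, so the Haar wavelet is assumed here, and the sampling rate, the synthetic single-channel signal and all function names are hypothetical. Band-pass filtering, the Laplacian montage and the multilayer perceptron are omitted.

```python
import math

def haar_step(signal):
    """One level of the Haar DWT: returns (approximation, detail) coefficients."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal) - 1, 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal) - 1, 2)]
    return approx, detail

def five_band_decomposition(signal, levels=4):
    """Four Haar levels give four detail bands plus one approximation band."""
    bands = []
    current = list(signal)
    for _ in range(levels):
        current, detail = haar_step(current)
        bands.append(detail)
    bands.append(current)  # final approximation = lowest-frequency band
    return bands

def log_power(coeffs, eps=1e-12):
    """Logarithm of the mean squared coefficient (eps avoids log(0))."""
    return math.log(sum(c * c for c in coeffs) / len(coeffs) + eps)

def log_power_descriptors(signal):
    """One log-power value per band: a 5-element feature vector per channel."""
    return [log_power(band) for band in five_band_decomposition(signal)]

# Hypothetical single-channel example: 256 samples at an assumed 128 Hz,
# a slow 3 Hz component plus a weaker 40 Hz component.
fs = 128
signal = [math.sin(2 * math.pi * 3 * t / fs) + 0.2 * math.sin(2 * math.pi * 40 * t / fs)
          for t in range(256)]
bands = five_band_decomposition(signal)
features = log_power_descriptors(signal)
```

In a full system, one such feature vector per channel would be concatenated and passed to the classifier; a library such as PyWavelets would normally replace the hand-written Haar transform.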

A brain machine interface using EEG data facilitates the control of machines through the analysis and classification of signals directly from the human brain. A 14-electrode headset from Emotiv Systems is used to capture EEG data, which is then classified and encoded into signals for controlling a 7-degree-of-freedom robotic arm. The collected EEG data is analyzed with a feature extraction methodology based on independent component analysis and classified by a multilayer neural network into one of several control signals: lift, lower, rotate left, rotate right, open, close, etc. The system also collects electromyography signals indicative of facial muscle movement. A personal set of EEG data patterns is trained for each individual. Research is in progress to extend the range of controls beyond a few discrete actions by refining the algorithmic steps and procedures.
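The last stage described above, turning classifier outputs into one of the discrete control signals, might look like the following sketch. The command names come from the abstract; the softmax decoding, the confidence threshold and the example scores are assumptions added for illustration and are not taken from the talk.

```python
import math

# Control signals listed in the abstract.
COMMANDS = ["lift", "lower", "rotate left", "rotate right", "open", "close"]

def softmax(scores):
    """Convert raw classifier scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def decode_command(scores, min_confidence=0.5):
    """Return the most likely command, or None if the classifier is unsure.

    min_confidence is a hypothetical safety threshold: below it, no command
    is sent to the arm.
    """
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return None
    return COMMANDS[best]

# Hypothetical output scores from the neural network for one EEG window.
command = decode_command([0.1, 0.2, 3.0, 0.1, 0.0, 0.3])
```

Rejecting low-confidence windows is one simple way to avoid sending spurious movements to the arm; the actual system may handle uncertainty differently.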

Dr. Vineeth N Balasubramanian


Associate Professor, IIT Hyderabad, India

LinkedIn: https://www.linkedin.com/in/vineethnb/

Google Scholar: https://scholar.google.com/citations?user=7soDcboAAAAJ&hl=en


Bio: Dr. Vineeth N Balasubramanian is an Associate Professor in the Department of Computer Science and Engineering and Head of the Department of Artificial Intelligence at the Indian Institute of Technology, Hyderabad. He was previously associated with Arizona State University in various teaching and research roles and worked at Oracle as an Application Engineer. He received his PhD in Computer Science from Arizona State University (2005 to 2010), and an M.Tech in Computer Science (2001 to 2003) and an M.Sc. in Mathematics (1999 to 2001) from Shri Sathya Sai Institute of Higher Learning.

Title of Talk: Towards Explainable and Robust AI Practice

The last decade has seen rapid strides in Artificial Intelligence (AI) moving from being a fantasy to a reality that is a part of each one of our lives, embedded in various technologies. A catalyst of this rapid uptake has been the enormous success of deep learning methods for addressing problems in various domains including computer vision, natural language processing, and speech understanding. However, as AI makes its way into risk-sensitive and safety-critical applications such as healthcare, aerospace and finance, it is essential for AI models to not only make predictions but also be able to explain their predictions, and be robust to adversarial inputs. This talk will introduce the audience to this increasingly important area of explainable and robust AI, as well as describe some of our recent research in this domain including the role of causality in explainable AI, as well as the connection between explainability and adversarial robustness.

Dr. Arash Shaban-Nejad

Associate Professor, University of Tennessee Health Science Center, USA

Bio: Arash Shaban-Nejad is the Director of the Population Health Intelligence (PopHI) lab and an Associate Professor in the UTHSC-Oak Ridge National Laboratory (ORNL) Center for Biomedical Informatics and the Department of Pediatrics at the University of Tennessee Health Science Center (UTHSC). Before coming to UTHSC, he was a Postdoctoral Fellow of the McGill Clinical and Health Informatics Group at McGill University. Dr. Shaban-Nejad received his Ph.D. and MSc in Computer Science (AI and Bioinformatics) from Concordia University, Montreal, and a Master of Public Health (MPH) from the University of California, Berkeley. He received additional training at the Harvard School of Public Health. His primary research interests are Population Health Intelligence, Precision Health and Medicine, Epidemiologic Surveillance, Semantic Analytics and Explainable Medicine, using tools and techniques from Artificial Intelligence, Knowledge Representation, Semantic Web, and Data Science. His research has been supported by grants from the Canadian Institutes of Health Research (CIHR), the National Institutes of Health (NIH)/National Cancer Institute (NCI), the Gates Foundation, Microsoft Research, and the Memphis Research Consortium (MRC).

Title of Talk: Explainable AI-powered Clinical and Population Health Decision Making

Dr. Vijay Arya


Senior Researcher at IBM India Research Lab (IRL), Bangalore


Bio: Vijay Arya is a Senior Researcher at IBM Research India and part of the IBM Research AI group, where he works on problems related to Trusted AI. Vijay has 15 years of combined experience in research and software development. His research spans machine learning, energy and smart grids, network measurements and modeling, wireless networks, algorithms, and optimization. His work has received Outstanding Technical Achievement Awards, Research Division Awards, and Invention Plateau Awards at IBM, and has been deployed by power utilities in the USA. Before joining IBM, Vijay worked as a researcher at National ICT Australia (NICTA). He received his PhD in Computer Science from INRIA, France, and a Master's from the Indian Institute of Technology (IIT) Delhi. He has served on the program committees of IEEE, ACM, and IFIP conferences, is a senior member of IEEE and ACM, and has more than 60 conference and journal publications and patents.

Title of Talk: One Explanation Does Not Fit All: A Toolkit and Taxonomy of AI Explainability Techniques

Er. Jay Shah


Research Scholar, Arizona State University

Home Page: public.asu.edu/~jgshah1/


Bio: Jay is a Ph.D. student at Arizona State University supervised by Dr. Teresa Wu and Dr. Baoxin Li. Drawing on biomedical informatics, computer vision, and deep learning, he focuses on developing novel, interpretable AI models for biomarker discovery and early detection of neurodegenerative diseases, including Alzheimer’s and post-traumatic headache. Prior to pursuing a Ph.D., he worked with Nobel Laureate Frank Wilczek, interned at NTU-Singapore and HackerRank-Bangalore, and graduated from DAIICT, India. Jay also hosts an AI podcast (80,000+ downloads) on which he has interviewed professors, scientists, reporters, and engineers working in different areas of machine learning research and applications.

Title of Talk: Landscape of Interpretable AI, its Limitations and a glance at SHAP

Dr. Mehul S Raval

Associate Dean – Experiential Learning and Professor, Ahmedabad University

Bio: Mehul S Raval is Associate Dean – Experiential Learning and Professor at Ahmedabad University. His research interests are in computer vision and engineering education. He obtained his Bachelor’s degree (ECE) in 1996, Master’s degree (EC) in 2002, and Ph.D. (ECE) in 2008 from College of Engineering Pune / University of Pune, India. He has 24+ years of experience as an academic, including a visit to Okayama University, Japan, under a Sakura Science fellowship, an appointment as Argosy visiting associate professor at Olin College of Engineering, USA, during Fall 2016, and a visiting professorship at Sacred Heart University, CT, in 2019.

He publishes in journals, magazines, conferences, and workshops and reviews papers for leading publishers – IEEE, ACM, Springer, Elsevier, IET, SPIE. He has received research funds from the Board of Research in Nuclear Science (BRNS), and the Department of Science and Technology, Government of India. He is supervising Doctoral, M.Tech, and B.Tech students and serves on doctoral committees.

He chairs programs and volunteers on technical program committees for conferences, workshops, and symposiums. He also serves on Boards of Studies (BoS) that develop engineering curricula for various universities in India. He is a senior member of IEEE, Fellow of IETE, and Fellow of The Institution of Engineers (India). Dr. Raval served IEEE Gujarat Section as Joint Secretary during 2008 – 2015 and 2018 – 2020. He also served the IEEE Signal Processing Society (SPS) Chapter – IEEE Gujarat Section as vice chair and executive committee member in 2014. He currently serves as Chair of the IEEE Computational Intelligence Society Chapter – IEEE Gujarat Section.

Title of Talk: Attacks and defense against the adversarial examples for CNNs

Convolutional neural networks (CNNs) are extremely susceptible to adversarial examples: inputs carrying imperceptible perturbations that fool a CNN so that it fails to classify or recognise them correctly. Adversarial noise is added to images, videos or speech files in a targeted manner so that the CNN produces a wrong result. An attacker can poison the training database by adding adversarial examples to it, or tamper with a physical-world object so that the CNN fails to classify it correctly. Systems that can be attacked with malicious intent include face recognition systems and autonomous cars. For example, in the physical world, patches on a stop sign can cause a CNN to fail and may lead to an accident. Attacks can be classified as white box or black box depending on the amount of information available. In a white-box attack, complete information about the CNN architecture and its parameters is available to the attacker, while in a black-box attack no such information is available. The talk will review some state-of-the-art attacks. Defenses against adversarial examples include: 1. hardening the CNN during its learning phase through adversarial training, gradient hiding or blocking transferability; 2. designing a robust CNN by adjusting its architecture to make it immune to adversarial noise; 3. using preprocessing filters to remove adversarial noise; 4. detecting adversarial examples through feature squeezing. One defense technique is to detect adversarial images by observing the outputs of a CNN-based system when noise removal filters are applied. Such operation-oriented characteristics make it possible to detect adversarial examples. In this talk, I will show state-of-the-art techniques for attacks on, and defenses against, adversarial examples.
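To make the white-box setting above concrete, here is a minimal sketch of a gradient-sign perturbation. Several things are assumptions: the abstract does not name a specific attack, so the fast gradient sign method (FGSM) is used as one well-known white-box example, and a tiny logistic-regression model with made-up weights stands in for a CNN so the gradient can be written by hand.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic model (stand-in for a CNN)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def predict(w, b, x):
    return 1 if predict_proba(w, b, x) >= 0.5 else 0

def fgsm(w, b, x, y, eps):
    """White-box attack: the attacker knows w and b, so the loss gradient is exact.

    For cross-entropy loss, d(loss)/dx = (p - y) * w. Each input component is
    moved by eps in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model parameters and input; the true label is 1.
w, b = [2.0, -1.0, 0.5], 0.1
x = [0.3, 0.1, 0.2]
eps = 0.25
x_adv = fgsm(w, b, x, y=1, eps=eps)

clean_label = predict(w, b, x)    # prediction on the clean input
adv_label = predict(w, b, x_adv)  # prediction on the perturbed input
```

No component of the input moves by more than `eps`, yet the predicted label changes. A black-box attacker would not have access to `w` and `b` and would have to estimate the gradient or transfer an attack from a substitute model.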

Emerging Research Topics in Engineering 2021

| Date | Industry/Academia | Topics of Session | Speaker Name | Designation of Speaker | Session Start Time | Session End Time |
| --- | --- | --- | --- | --- | --- | --- |
| October 1, 2021 | | Inauguration | | | 4.30 PM | 4.45 PM |
| October 1, 2021 | Academia | Towards Explainable and Robust AI Practice | Dr. Vineeth N Balasubramanian | Associate Professor, IIT Hyderabad, India | 4.45 PM | 6.00 PM |
| October 1, 2021 | Academia | Explainable AI-powered Clinical and Population Health Decision Making | Dr. Arash Shaban-Nejad | Associate Professor, University of Tennessee Health Science Center, USA | 6.15 PM | 7.30 PM |
| October 2, 2021 | Industry | All explanations are right — only some are useful | Dr. Soma S. Dhavala | Principal Researcher, The Wadhwani Institute of Artificial Intelligence, India | 4.00 PM | 5.15 PM |
| October 2, 2021 | Academia | Emotion Recognition by Spatiotemporal Analysis of EEG Signals | Dr. Vijayan Asari | Professor, University of Dayton, USA | 5.30 PM | 6.45 PM |
| October 2, 2021 | Academia | Landscape of Interpretable AI, its Limitations and a glance at SHAP | Mr. Jay Shah | Research Scholar, Arizona State University, USA | 7.00 PM | 8.15 PM |
| October 3, 2021 | Industry | One Explanation Does Not Fit All: A Toolkit and Taxonomy of AI Explainability Techniques | Dr. Vijay Arya | Senior Researcher, IBM Research Lab, India | 2.00 PM | 3.15 PM |
| October 3, 2021 | Academia | Attacks and defense against the adversarial examples for CNNs | Dr. Mehul Raval | Associate Dean – Experiential Learning and Professor, Ahmedabad University | 3.30 PM | 4.45 PM |
| October 3, 2021 | | Valedictory and Participant Feedback | | | 4.45 PM | 5.00 PM |

| Participant Category | Registration Fee # |
| --- | --- |
| IEEE Student Members * | 300/- INR |
| IEEE Professional Members * | 300/- INR |
| Non-IEEE | 500/- INR |

#Registration fee includes GST.

*Upload valid IEEE membership card

(IEEE membership card can be downloaded from https://www.ieee.org/profile/membershipandsubscription/printMembershipCard.html )

Mode of Payment: NEFT or IMPS or UPI

Registration Link: Click here to Register

Registration Account Details

Bank Account Name: IEEE Gujarat Section

Bank Account Number: 10307643269

Bank Name: State Bank of India

Bank Branch: SBI, Infocity Branch

Address: Ground Floor, Infocity, Gandhinagar

Branch code: 012700

IFSC Code: SBIN0012700

MICR Code: 380002151

A digital version of the participation certificate will be provided to every participant.

For any queries contact: Harshul Yagnik, Email: ieeegujaratsec@gmail.com, Mobile: +91-9737642757